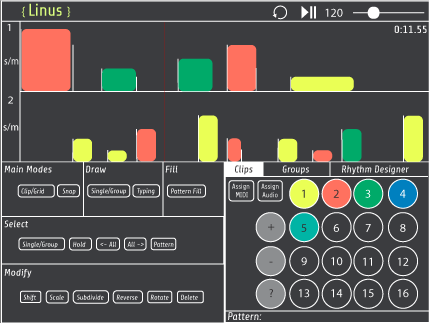

Rhythm Sequencer App

3/2016 - 5/2016

In progress. An iOS app for sequencing complex rhythms on a customizable grid. The design allows interpolation between two rhythmic patterns to create a hybrid pattern, among other unique tools.
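As a rough illustration of pattern interpolation (not the app's actual code, which is not shown here), one simple approach is to store each pattern as a list of per-step velocities and blend them linearly. The function name `interpolate_patterns` and the velocity-list representation are assumptions made for this sketch.

```python
def interpolate_patterns(pattern_a, pattern_b, t):
    """Linearly blend two equal-length step patterns.

    Each pattern is a list of per-step velocities in [0, 1], where 0 means
    "no hit". t = 0 returns pattern_a, t = 1 returns pattern_b, and values
    in between give a hybrid. This representation is an assumption made
    for illustration only.
    """
    if len(pattern_a) != len(pattern_b):
        raise ValueError("patterns must have the same number of steps")
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be between 0 and 1")
    return [(1.0 - t) * a + t * b for a, b in zip(pattern_a, pattern_b)]


# Two hypothetical 8-step patterns.
four_on_floor = [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
syncopated = [1.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0]

hybrid = interpolate_patterns(four_on_floor, syncopated, 0.5)
print(hybrid)  # [1.0, 0.0, 0.5, 0.0, 0.5, 0.0, 0.5, 0.0]
```

Halfway between the two patterns, the shared downbeat keeps full velocity while the steps unique to either pattern become softer ghost notes.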

Github

Paper (PDF)

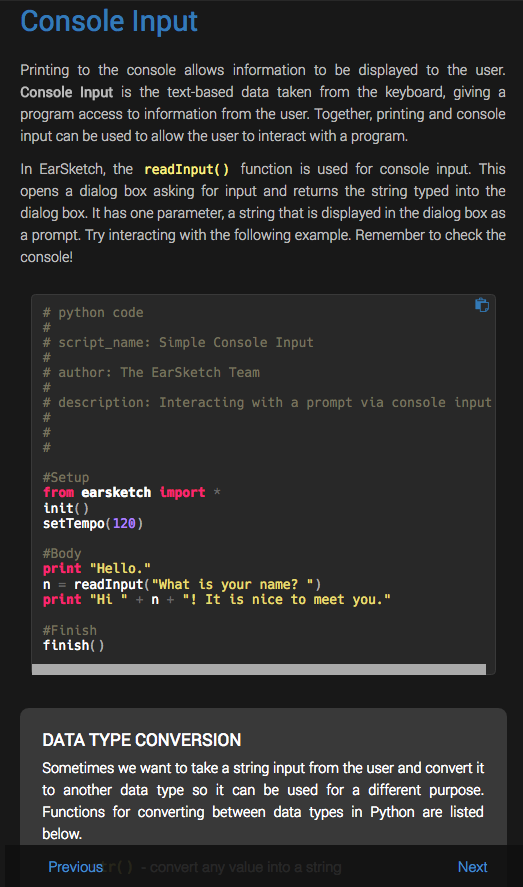

EarSketch Curriculum

8/2014 - 1/2016

I wrote and maintained an online A.P. computer science curriculum for EarSketch. EarSketch is a National Science Foundation-funded initiative that was created to motivate students to consider further study and careers in computer science. It is a web-based DAW / IDE for making music with code, and contains an integrated curriculum for teaching computer science, music technology, and music composition.

EarSketch Site

JavaScript Curriculum (PDF)

Python Curriculum (PDF)

Note: curriculum optimized for web, not PDF.

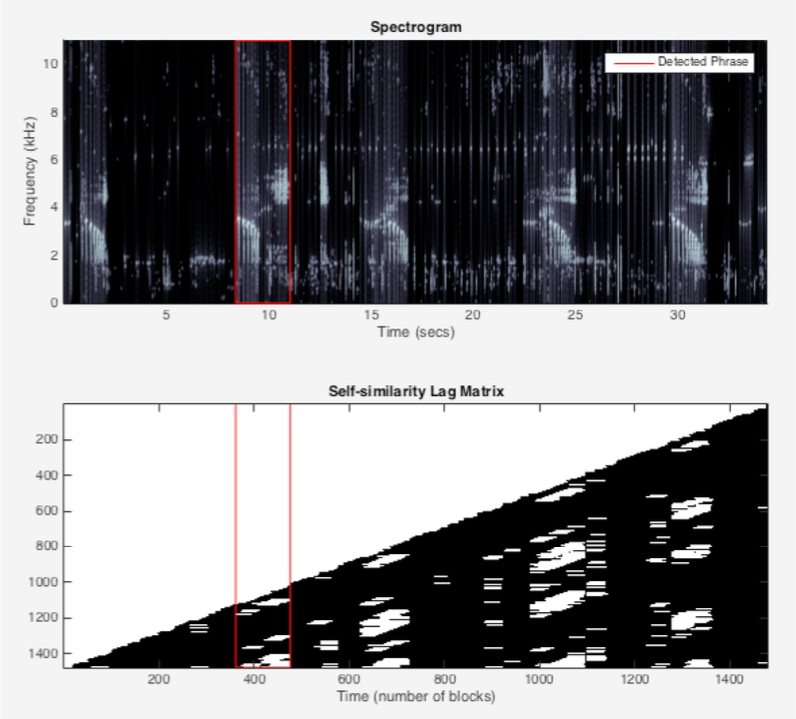

Birdsong Classifier

12/2016

Avrosh Kumar and I implemented a birdsong classifier in MATLAB and tested it on the BirdCLEF data set. We applied de-noising and musical segmentation techniques (especially the self-similarity lag matrix) to the data before training a k-nearest-neighbor classifier.
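The two named techniques can be sketched in a few lines. This is written in Python rather than the project's original language, and it is only an illustration: the cosine similarity measure, the lag convention (`lag[i][l]` compares frame `i` with frame `i - l`), and the helper names `lag_matrix` and `knn_predict` are assumptions, not details taken from the project.

```python
import math
from collections import Counter


def cosine_similarity(u, v):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm > 0 else 0.0


def lag_matrix(frames):
    """Self-similarity in time-lag form.

    lag[i][l] holds the similarity between frame i and frame i - l.
    Entries with l > i have no earlier frame to compare against and stay 0.0.
    Repeated segments show up as runs of high values at a constant lag l.
    """
    n = len(frames)
    lag = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for l in range(i + 1):
            lag[i][l] = cosine_similarity(frames[i], frames[i - l])
    return lag


def knn_predict(train, query, k=3):
    """Label a query vector by majority vote among its k nearest training points.

    train is a list of (feature_vector, label) pairs; distance is Euclidean.
    """
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: 2-D features for two made-up species labels.
training_set = [
    ([0.0, 0.0], "species_a"),
    ([0.1, 0.0], "species_a"),
    ([1.0, 1.0], "species_b"),
    ([0.9, 1.0], "species_b"),
    ([1.0, 0.9], "species_b"),
]
print(knn_predict(training_set, [0.95, 0.95], k=3))
```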

Teammate: Avrosh Kumar

Github

Paper (PDF)

Beachball Synth

2/2015

As part of the Moog Hackathon at Georgia Tech, our team built a wireless synth controller designed to be embedded in a beachball. The controller sends gyro/accelerometer data to a computer and then to an Arduino, and the beachball's movement is finally mapped to controls on a Moog Werkstatt synthesizer. Our team received an honorable mention.

Teammates: Hayden Riddiford and Charles “Julian” Knight

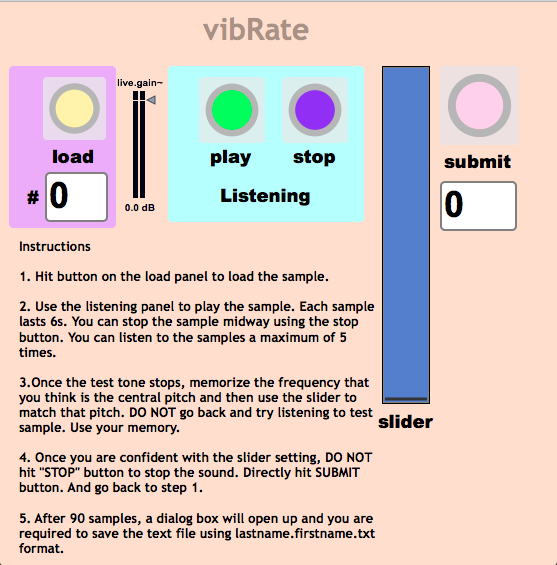

Vibrato & Pitch Perception

12/2014

A study on how the rate and depth of vibrato, modeled here as frequency modulation, affect a listener’s sense of central pitch. The data were collected from human subjects using a system built in Max/MSP. We found that vibrato rate had little effect on the listener’s ability to identify a central pitch. I designed the experiment, built the first versions of the Max patch, and co-wrote the paper.
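For readers unfamiliar with vibrato as frequency modulation, here is a minimal sketch in Python (the study itself used a Max patch, which is not reproduced here). The function name `vibrato_tone` and its parameters are assumptions for illustration; depth is expressed as peak frequency deviation in Hz.

```python
import math


def vibrato_tone(center_hz, rate_hz, depth_hz, duration_s, sample_rate=44100):
    """Generate a sine tone whose frequency wobbles around center_hz.

    The instantaneous frequency is
        center_hz + depth_hz * cos(2 * pi * rate_hz * t),
    so rate_hz sets how fast the pitch wobbles and depth_hz how far.
    The phase below is the time integral of that frequency.
    """
    if rate_hz <= 0:
        raise ValueError("rate_hz must be positive")
    num_samples = round(duration_s * sample_rate)
    mod_index = depth_hz / rate_hz  # peak phase deviation in radians
    samples = []
    for i in range(num_samples):
        t = i / sample_rate
        phase = (2 * math.pi * center_hz * t
                 + mod_index * math.sin(2 * math.pi * rate_hz * t))
        samples.append(math.sin(phase))
    return samples


# Hypothetical stimulus: A4 with a 6 Hz vibrato that deviates up to 10 Hz.
tone = vibrato_tone(center_hz=440.0, rate_hz=6.0, depth_hz=10.0, duration_s=0.5)
print(len(tone))
```

Setting `depth_hz` to 0 gives a plain sine at `center_hz`, which makes a convenient reference tone when comparing perceived pitch.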

Teammates: Deepak Gopinath, Alan Zhang, Brian Lee, Roozbeh Khodambashi

Github

Paper (PDF)

Robotic Drumming with Shimon

2/2016

Shimon is a robotic marimba player living at the Georgia Tech Center for Music Technology. I replaced Shimon’s marimba with a set of drums and created an interactive system where Shimon responds to timbral changes in the drums (effected by a human performer). For example, bending a struck metal sheet was mapped to an increase in tempo, while other drums detected onsets and triggered new sections in the music. Avrosh Kumar, Chris Latina, and I formed a band around this system and performed at the Listening Machines 2016 concert.

Paper (PDF)

Github

Concert Video (YouTube)

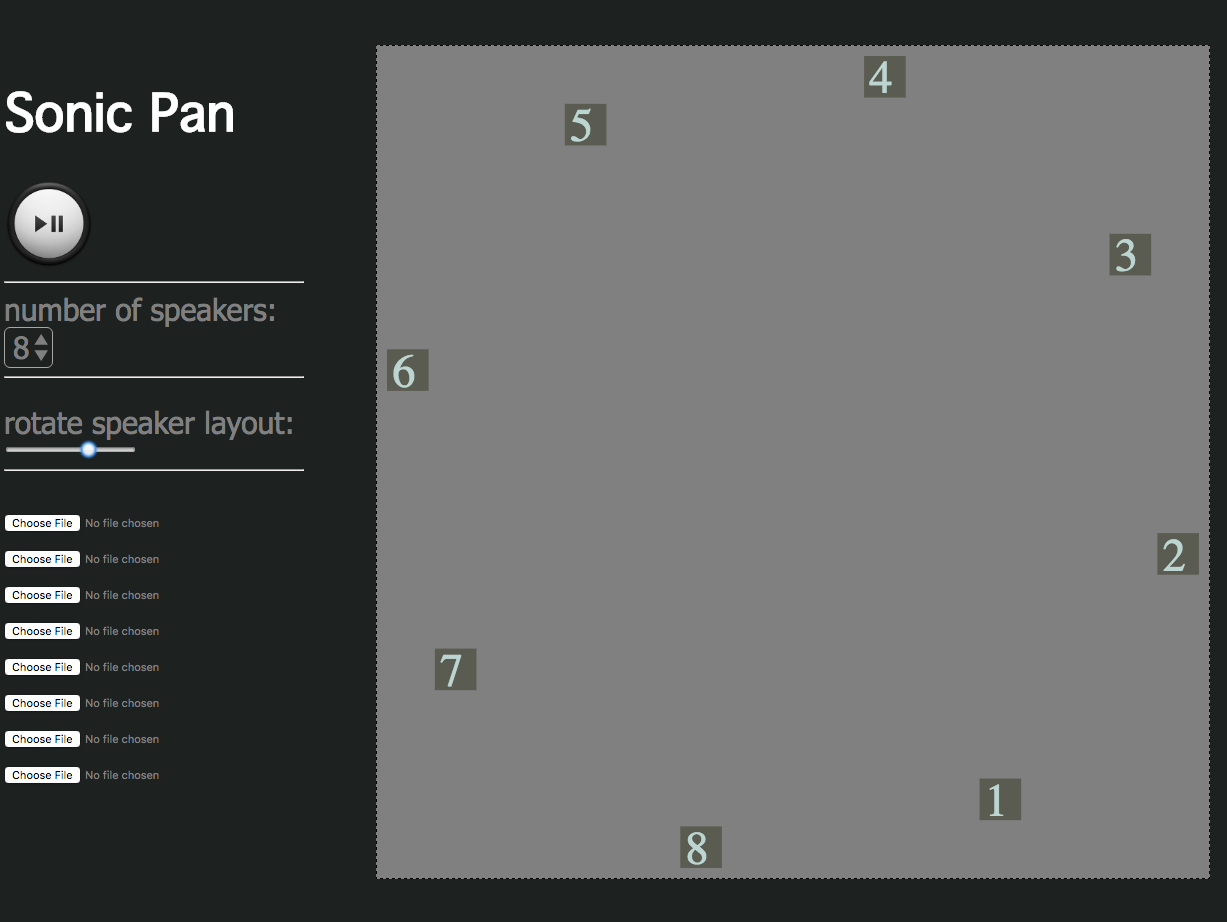

Web Surround Panner

3/2016

A simple website for testing surround panning using the Web Audio API. A canvas shows the speaker positions in the current audio setup, and mousing over the canvas pans the audio based on the mouse position and the speaker positions. In progress.
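One common way to turn a pointer position and a set of speaker positions into per-speaker gains is distance-based amplitude panning. The sketch below, in Python for brevity, shows that idea only; it is an assumption, not necessarily the method the site uses, and the name `speaker_gains` and its parameters are hypothetical.

```python
import math


def speaker_gains(pointer, speakers, rolloff=1.0, min_distance=1e-6):
    """Compute one gain per speaker from the pointer's distance to each.

    pointer is an (x, y) pair and speakers is a list of (x, y) pairs in the
    same canvas coordinates. Closer speakers get larger gains. Gains are
    normalized so their squares sum to 1, which keeps total power roughly
    constant as the pointer moves.
    """
    if not speakers:
        raise ValueError("at least one speaker is required")
    weights = []
    for sx, sy in speakers:
        # Clamp the distance so a pointer exactly on a speaker does not divide by zero.
        distance = max(math.hypot(pointer[0] - sx, pointer[1] - sy), min_distance)
        weights.append(1.0 / distance ** rolloff)
    norm = math.sqrt(sum(w * w for w in weights))
    return [w / norm for w in weights]


# Hypothetical quad layout at the corners of a unit-square canvas.
quad = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
print(speaker_gains((0.5, 0.5), quad))  # centered pointer: equal gains
print(speaker_gains((0.1, 0.1), quad))  # near the first speaker: it dominates
```

In a browser, each resulting value could drive the gain of the audio channel routed to the matching speaker.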

Github

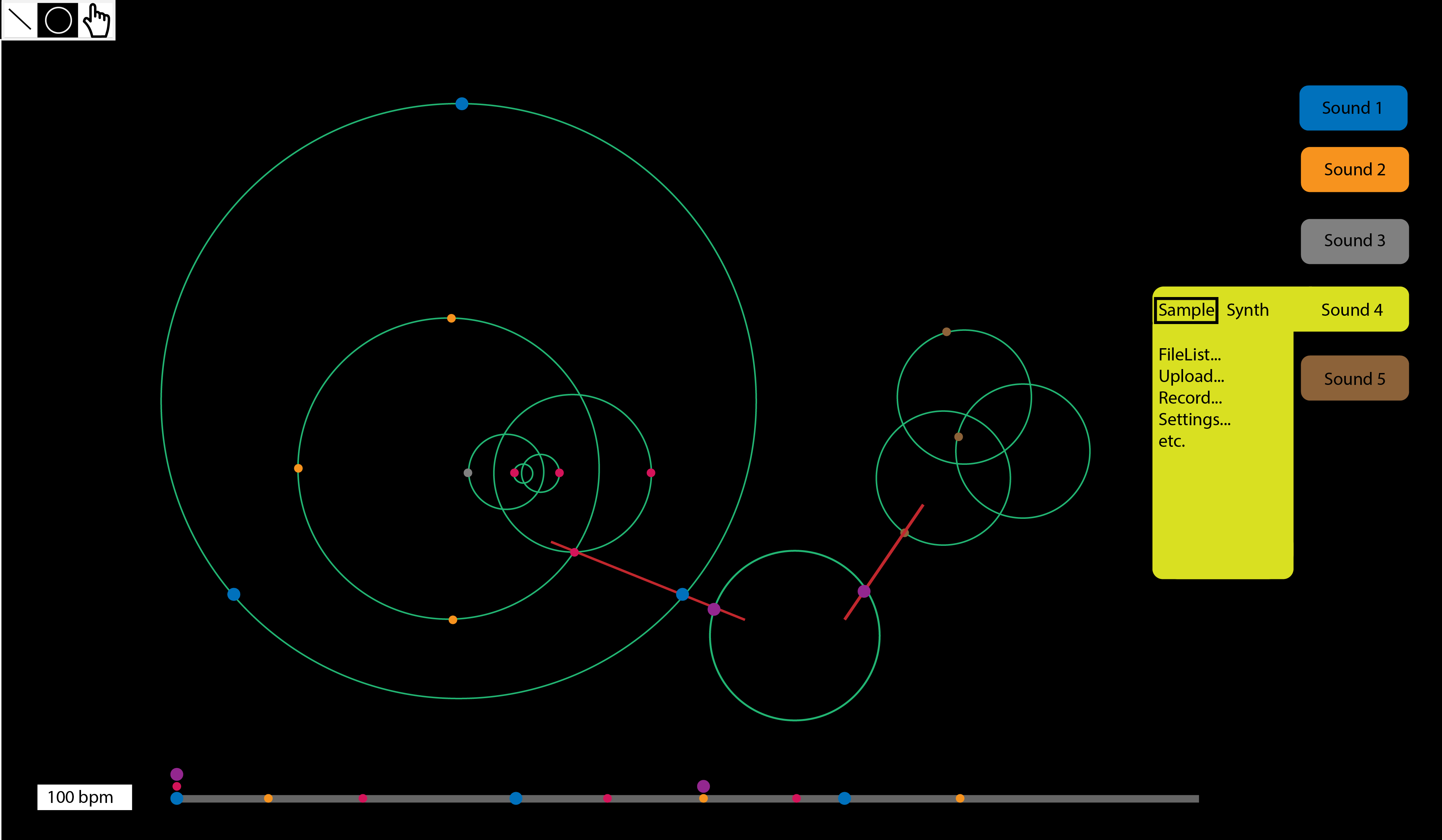

Circle Sounds

11/2014

A web-based audio-visual toy. The user can draw circles and place events on them, which rotate and make sound when colliding with other circles.

Github

Analysis: Tenney's Collage #1

11/2014

A visual and written analysis of James Tenney's "Collage No. 1 (Blue Suede)", one of the first pieces of music to sample popular music.

Paper (PDF)